Evolving towards multi-service enterprise edge compute and cloud virtualization infrastructure

There’s a lot of talk about the ‘edge’ these days—edge compute, edge cloud, near edge, far edge, the list goes on.

We see these as different approaches to the same goal: ensuring that enterprise employees have ‘always-on’ access to the data, content, and applications they need to do their jobs, regardless of their physical location. These edge approaches also deliver a higher-quality customer experience and meet application-specific latency and bandwidth needs.

For over a decade, enterprises accomplished this by moving many applications into public, private, and hybrid cloud compute environments. Traditionally, however, this cloud approach hosted enterprise compute and storage applications in distant public data centers, and remote enterprise users reached these applications and content through network connectivity services aggregated at headquarters or the company data center.

Three key trends are driving the need to move applications, content, and data closer to enterprise users:

- The increasing number of work locations, including remote offices, work-from-home, and mobile workers

- The rapid expansion and diversity of off-the-shelf and proprietary business applications

- Stricter latency requirements of key transformation applications like Artificial Intelligence (AI), augmented and virtual reality, autonomous infrastructure, robotics, gaming, video, and IoT

Enterprises are at different stages of their cloud evolution. Each enterprise's data and user requirements, application demands, and stage of evolution determine the customized solution that suits it.

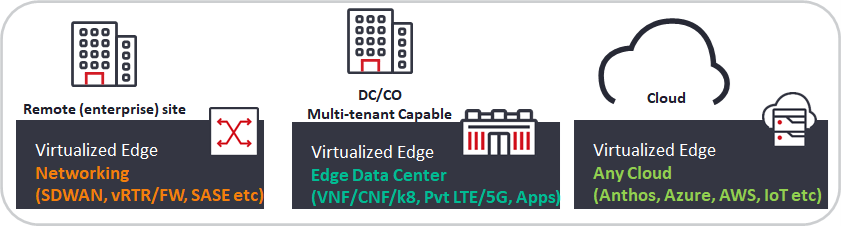

Some enterprises simply want to optimize how they connect their dispersed branch locations to applications in a traditional cloud compute environment. In this scenario, they replace static network connectivity and security with Software-Defined WAN (SD-WAN), which lets them optimize and secure network traffic across various underlying connectivity types, including 5G.

In addition, they’re replacing legacy physical network devices, such as routers, firewalls, and load balancers, in each location with Virtual Network Functions (VNFs) or Containerized Network Functions (CNFs) hosted on a single edge device that combines a virtual connectivity switch with x86 compute server modules.
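A minimal Python sketch of application-aware path selection can illustrate how SD-WAN steers traffic across underlying connectivity types. It assumes hypothetical link measurements (latency, loss, relative cost) and hypothetical per-application thresholds; the link names, numbers, and selection policy are illustrative only and are not any vendor's SD-WAN algorithm.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """Measured health of one WAN underlay (hypothetical values)."""
    name: str
    latency_ms: float
    loss_pct: float
    cost: int  # relative cost; lower is cheaper


@dataclass(frozen=True)
class AppPolicy:
    """Hypothetical SLA thresholds an application class needs from the WAN."""
    app: str
    max_latency_ms: float
    max_loss_pct: float


def select_path(policy: AppPolicy, links: list[Link]) -> Link | None:
    """Pick the cheapest link that meets the policy's SLA.

    Falls back to the lowest-latency link when no link complies,
    and returns None only when no links are available.
    """
    if not links:
        return None
    compliant = [
        link for link in links
        if link.latency_ms <= policy.max_latency_ms
        and link.loss_pct <= policy.max_loss_pct
    ]
    if compliant:
        # Prefer lower cost, then lower latency, among compliant links.
        return min(compliant, key=lambda link: (link.cost, link.latency_ms))
    return min(links, key=lambda link: link.latency_ms)


# Hypothetical underlays and measurements at one branch.
links = [
    Link("broadband", latency_ms=35.0, loss_pct=0.5, cost=1),
    Link("5g", latency_ms=18.0, loss_pct=0.2, cost=3),
    Link("mpls", latency_ms=25.0, loss_pct=0.01, cost=5),
]

video = AppPolicy("video-conferencing", max_latency_ms=20.0, max_loss_pct=1.0)
backup = AppPolicy("file-backup", max_latency_ms=200.0, max_loss_pct=2.0)
pos = AppPolicy("point-of-sale", max_latency_ms=50.0, max_loss_pct=0.1)

for policy in (video, backup, pos):
    print(f"{policy.app} -> {select_path(policy, links).name}")
# video-conferencing -> 5g
# file-backup -> broadband
# point-of-sale -> mpls
```

Under these assumed numbers, latency-sensitive video lands on the 5G link, bulk backup on the cheapest link, and point of sale on the lowest-loss link. Choosing the cheapest compliant link, with a lowest-latency fallback, is only one plausible policy; commercial SD-WAN products expose many configurable steering modes.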

Other enterprises want to extend this single-device approach to virtualized business applications such as point of sale, inventory, video, analytics, and AI, running on a single higher-capacity edge compute device either on premises or in a local micro data center.

Some enterprises go a step further, adding multi-tenant, multi-cloud integration capabilities hosted on the same device and enabled by a robust software stack, so that each branch, work-from-home, or mobile worker can access applications from a single edge device.

This type of ‘virtualized edge solution’ offers the enterprise many benefits, including:

- Reducing WAN complexity and improving application performance

- Minimizing update windows and maintenance outages

- Avoiding disruption of branch operations

- Reducing CAPEX and OPEX

- Increasing IT staff effectiveness and agility

A small percentage of enterprises will choose to deploy and manage this ‘virtualized edge infrastructure’ themselves in each remote branch location or local micro data center hub. Most would rather purchase it on an ‘as-a-service’ basis from a Communications Service Provider (CSP), a Managed Service Provider (MSP), or a Cloud Service Provider. Regardless of which ‘cloud-native’ strategy an enterprise chooses, there are a few key practices that can make the journey simpler, less costly, and less risky:

- To the greatest extent possible, look for solutions that follow ‘open’ design principles and enable a multi-vendor environment. For example, the enabling software stack should be fungible and secure while serving a multi-tenant infrastructure. It should also be able to steer different traffic types, expose open northbound APIs such as NETCONF/YANG and REST, and help stitch together network underlays to build an end-to-end ecosystem.

- Seek vendor-agnostic partners that offer a strong portfolio of VNFs and CNFs and a robust multi-tenant, multi-service edge cloud software stack. This gives enterprises the greatest degree of choice and flexibility.

- Choose orchestration software that provides an open, modular solution for instantiating, managing, and service chaining VNFs and CNFs. The software should also intelligently automate end-to-end service lifecycle management, from inventory and design to orchestration and assurance, across the multi-vendor, multi-domain infrastructure.
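Service chaining means steering traffic through an ordered sequence of network functions. The Python sketch below illustrates only that ordering idea, under loudly stated assumptions: the firewall, NAT, and load-balancer functions are hypothetical stand-ins modeled as plain callables on a simplified packet dictionary. Real VNFs and CNFs run as separate virtual machines or containers that an orchestrator connects through virtual networking, not as in-process functions.

```python
from __future__ import annotations

from typing import Callable, Optional

# Hypothetical simplified packet: a dict of header fields.
Packet = dict
# Each network function returns the (possibly modified) packet, or None to drop it.
NetworkFunction = Callable[[Packet], Optional[Packet]]


def firewall(blocked_ports: set[int]) -> NetworkFunction:
    """Hypothetical firewall VNF: drop packets to blocked destination ports."""
    def apply(pkt: Packet) -> Optional[Packet]:
        return None if pkt["dst_port"] in blocked_ports else pkt
    return apply


def nat(public_ip: str) -> NetworkFunction:
    """Hypothetical NAT VNF: rewrite the source address (returns a copy)."""
    def apply(pkt: Packet) -> Optional[Packet]:
        return {**pkt, "src_ip": public_ip}
    return apply


def load_balancer(backends: list[str]) -> NetworkFunction:
    """Hypothetical load-balancer CNF: pick a backend by source port modulo backend count."""
    def apply(pkt: Packet) -> Optional[Packet]:
        return {**pkt, "dst_ip": backends[pkt["src_port"] % len(backends)]}
    return apply


def run_chain(chain: list[NetworkFunction], pkt: Packet) -> Optional[Packet]:
    """Pass a packet through each function in order; stop as soon as one drops it."""
    for nf in chain:
        pkt = nf(pkt)
        if pkt is None:
            return None
    return pkt


# The order of this list is the service chain.
chain = [
    firewall(blocked_ports={23}),
    nat(public_ip="203.0.113.10"),
    load_balancer(backends=["10.0.0.11", "10.0.0.12"]),
]

web = {"src_ip": "192.168.1.5", "src_port": 50001, "dst_ip": "198.51.100.7", "dst_port": 443}
telnet = {**web, "dst_port": 23}

print(run_chain(chain, web))
# {'src_ip': '203.0.113.10', 'src_port': 50001, 'dst_ip': '10.0.0.12', 'dst_port': 443}
print(run_chain(chain, telnet))
# None
```

Because the chain is just an ordered list, inserting, removing, or reordering a function changes the traffic path without touching the other functions, which is the kind of change the orchestration software described above is meant to automate.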

The evolution to a multi-service virtualized edge cloud environment is often not a simple task for either an enterprise or a service provider. Migrating from a rigid, hardware-centric environment to an agile, software-based, disaggregated one can be complex, especially when multiple VNFs, new containerized applications, and multi-tenant cloud services from a variety of suppliers are involved. Enterprises will need either in-house expertise or an experienced systems integration partner to create and validate the solution design, handle staging and site deployment, and perform any required lifecycle management or managed operations to achieve the full benefits of a multi-service virtualized edge cloud solution.